Interpreters III : Building a Just-In-Time (JIT) Compiler.

At SourceProbe we’re building awesome code analysis and navigation tools. Many parts of our stack use interpreter programs, both for understanding the target source code and for implementing our query language. We’re very interested in making these run as quickly as possible.

Previously we looked at a basic interpreter. This time we’ll look at improving our interpreter by eliminating the biggest overhead: the interpreter loop itself.

The interpreter overhead

Last time we saw that we could implement a basic programming language interpreter with a simple loop.

Here’s the performance we saw last time:

| Language & Link to code | Run time (min,sec) | Multiple vs C++ (lower is better) |

|---|---|---|

| C++, default | 3m51s | 1.0 |

| C++ (O3) | 36.95s | 0.16 |

| Python3 | 30m52s | 8.01 |

| ChickenScheme | 9m36s | 2.49 |

| SBCL | 2m35s | 0.67 |

Though our optimized C++ interpreter was very fast compared to our other implementations, we can actually make it even faster. The insight is that for any instructions needed to implement the target language, the cpu has to perform several others just to figure out what needs to run next. The overhead of the interpreter itself is slowing us down.

A Just-In-Time Compiler

One way to reduce the overhead of the interpreter is to ammortize its cost over several instructions, rather than using the interpreter for each individual language instruction.

Consider the simple program:

++++[-]Which sets up a counter, then loops until it has decreased that value down to zero. Right now, the interpreter makes a comparison against each symbol to decide what to do. And yet, we have some limited ability to predict ahead what the interpreter needs to do. Outside of branching, we know that the interpreter is going to simply execute all the instructions in order.

With the interpreter, we’re essentially the following for each + instruction:

if (pc > program.length())

return;

if (program[pc] == '+')

tape[i]++;Where we’d like to just execute:

tape[i]++;For each run of instructions without branching, we can predict series of future instructions. This chunk of code is often called a basic block. If we can build a more direct block of code for each basic block, then we can run these instead of running through the interpreter each time. This way we spread or ammortize the interpreter overhead over each instruction of the basic block.

This “more direct code” will simply be native instructions for our machine. Though this makes our code specific to a given machine architecture, the speedup can be worth it.

There’s another, perhaps more intuitive way to think about this. Our CPU is already in the business of looking at instructions in memory, and acting differently based on the observed instruction. Implementing this in software is somewhat redundant, and we can instead make use of the CPU’s own fetch-decode-execute pipeline to run our language.

This technique is known as Just-In-Time compilation. Rather than compiling our program ahead of time, we can essentially compile the program before executing any new code or basic blocks. We can also reuse the results for subsequent executions of the same block.

A JIT interpreter loop

Our new JIT loop looks something like the following :

# we can only jit-compile non-branch instructions

def jittable(symbol):

return symbol in '+-<>.'

if pc in block_cache:

execute block_cache[pc]

else if jittable(program[pc]):

compile_block

execute_block

else if program[pc] == '[':

# jump code

else if program[pc] == ']':

# jump codeSince we’re executing code partially via the JIT system, and partially via the interpreter, we’ll need to be sure to keep our pc variable updated. We’ll want to track how many language-nstructions were in the basic block, along with the native code, so that we can update pc after running each block.

Implementation notes

Note there are many ways to design a JIT, and this is just one. We’ve opted to have a separate assembly language file, where we implement code per language-instruction. We’ll have a label at the start and end of each section of code, so that we can refer to these addresses in our C++ code.

When the C++ code needs to generate a basic block, it will look for the assembled native code for that instruction, and concatenate this with all the other instructions in that block.

Is it faster?

After running our basic block JIT interpreter, we can run the mandelbrot benchmark once more to see how it performs:

$ time ./bf.jit.o ../mandel.bf > /dev/null

real 0m55.568s

user 0m55.541s

sys 0m0.012sWhile the code outperforms unoptimized C++, its slower than our original interpreter. This is a bit surprising, so lets capture a profile with perf to better understand this result.

$ g++ -g *.cc *.s -o bf.jit.o

# run these at the same time:

$ sudo record -F99 --call-graph dwarf -p $(pidof bf.jit.o) sleep 10

$ ./bf.jit.o ../mandel.bf

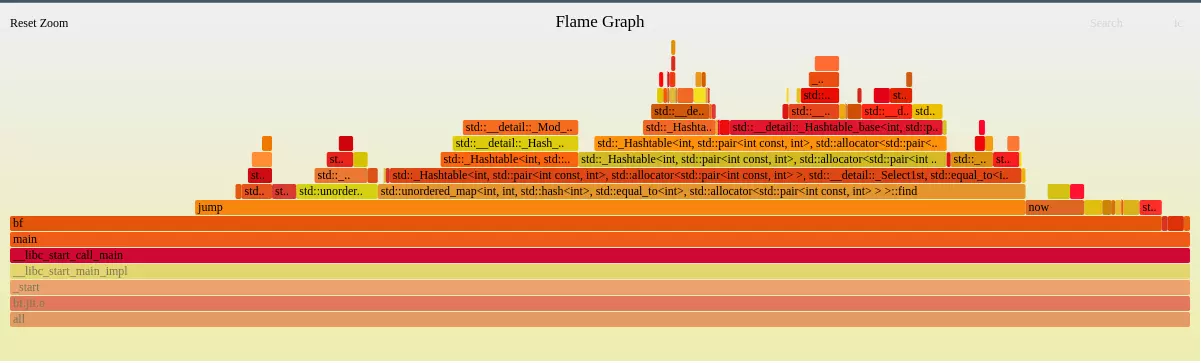

Feel free to click on the screenshot above to dive into the flamegraph.

We notice here that nearly all the time spent in our interpreter is spent executing jumps.

Though we sped up execution of basic block code, we made it harder for the cpu to predict future instructions. A basic block ends up jumping to some address stored in our basic block hashmap, which is opaque to the cpu.

Though somewhat disappointing, this also suggests one more optimization.

JIT compiling branch code.

Typically, a JIT would use the knowledge of the sequence of instructions

to generate optimized code. For example, in our case we could flatten a series

of + instructions into an add.

However, we’ve chosen to omit these optimizations since 1) they’re language/architecture specific, but primarily 2) we’ve found that most time is spent branching anyway.

These optimizations are hard to do around branches. We dont have that concern, so we’ll try to map the branches into native code as well.

One complication here is the x86-64 encoding of jump instructions. There are types of jump, but we’ll use one that is encoded with a 32 bit offset relative to the current instruction pointer. In order to write a jump instruction into memory, we’ll need to already know where the corresponding native code for the other instruction is.

For backward jumps, this is not an issue since the JIT compiler will already have generated the code. However for forward jumps, this hasn’t yet been accomplished.

We’ll solve this by making two passes when generating code. During the first pass, the JIT will write instructions and keep track of where the native code for each language-instruction ends up. Then we’ll make another pass to fixup the jump instruction offsets.

And now, our new “interpreter” looks like:

compile_block(0)

execute_block(0)The entire program will be encoded in this single block of native code.

And how does it perform?

real 0m18.194s

user 0m18.169s

sys 0m0.020s| Language & Link to code | Run time (min,sec) | Multiple vs Default build (lower is better) |

|---|---|---|

| C++, default | 3m51s | 1.0 |

| C++ (O3) | 36.95s | 0.16 |

| C++ (JIT) | 18.19s | 0.079 |

Our BasicBlock + Branches JIT is now 2x as fast on the mandelbrot program as the optimized c++ code for our original interpreter.

Next up, an ahead-of-time compiler.

The JIT is much faster than original interpreter. But we can make our language faster still. One approach would be to investigate in inter-instruction optimizations. This can be quite effective. Though instead we’ll use explore another technique entirely.

This next article isn’t ready yet. Join our newsletter to be notified when the article is published.

Join our newsletter to get notified of new articles and product updates. Unsubscribe at any time.